RAG → Agentic RAG → Agent Memory: Smarter Retrieval, Persistent Memory

Introduction

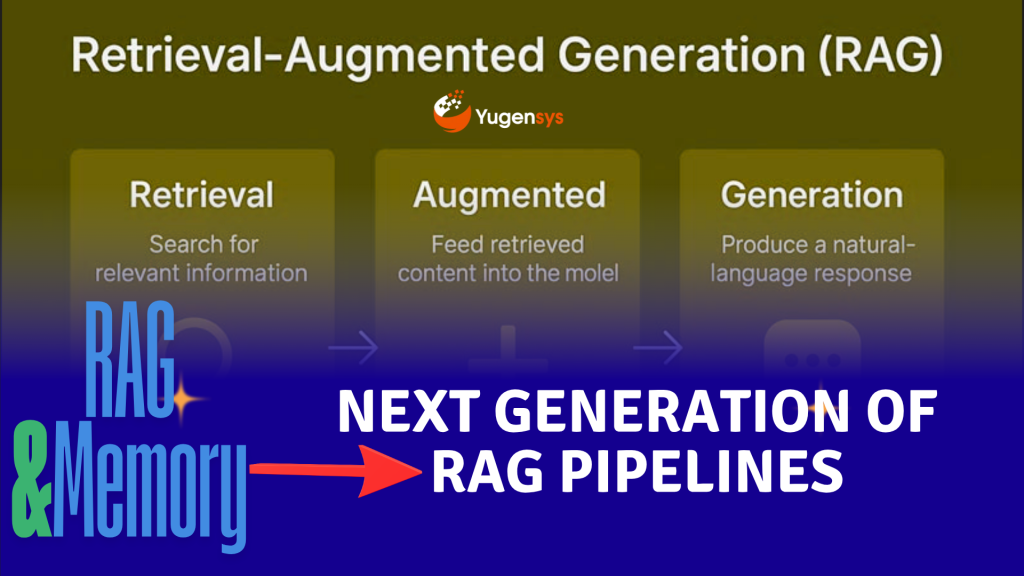

The evolution of RAG systems is entering a new and more dynamic phase. What started as a simple retrieve-and-generate pattern has expanded into architectures that can reason about when to retrieve, what to retrieve, and now what to remember. This shift isn’t just about adding smarter tools; it reflects a fundamental redesign of how AI systems manage information. From static, read-only RAG to adaptive, read–write agent memory, the landscape is changing quickly. This post walks through each stage of the progression, why it matters, and how it shapes the next generation of AI systems.

The current evolution of RAG systems

The shift from RAG → Agentic RAG → Agent Memory isn’t merely about stacking new features. It represents a fundamental change in how information is routed, processed, and retained within an AI system.

RAG: Read-Only, Single-Pass Retrieval

Classic RAG works like a “read-only” library.

You query a knowledge base, retrieve relevant documents, and generate a response from that static snapshot.

- The data store is updated offline.

- Retrieval happens at inference time.

- No state is carried forward.

It works well for fixed knowledge, but the interaction model is rigid and stateless.
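
To make the single-pass pattern concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the `DOCUMENTS` list stands in for a knowledge base built offline, `embed` is a toy bag-of-words function rather than a real embedding model, and `build_prompt` stops short of calling any LLM.

```python
import math
from collections import Counter

# Hypothetical, fixed knowledge base: in classic RAG this is updated offline.
DOCUMENTS = [
    "The Eiffel Tower is 330 m tall.",
    "Paris is the capital of France.",
    "pgvector adds vector similarity search to PostgreSQL.",
]

def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would call an embedding model."""
    return Counter(text.lower().replace(".", "").replace("?", "").split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, k: int = 1) -> list[str]:
    """Single-pass retrieval at inference time: rank documents, keep the top k."""
    q = embed(query)
    ranked = sorted(DOCUMENTS, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str) -> str:
    """Assemble the prompt that would be sent to the generator model."""
    context = "\n".join(retrieve(query))
    return f"Context:\n{context}\n\nQuestion: {query}"
```

Nothing is written back here: every call starts from the same static `DOCUMENTS` snapshot, which is exactly the stateless behavior described above.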

Agentic RAG: Intelligent Retrieval Control

Agentic RAG keeps the system read-only, but adds a layer of reasoning to the retrieval pipeline.

Now the agent can actively decide:

- Do I need to retrieve anything?

- Which source should I pull from?

- Is the returned context actually useful for the current task?

This is still retrieval-based, but the system is far more deliberate about when and what it reads.

The intelligence shifts from “retrieve everything always” to “retrieve strategically.”
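
The three decisions above can be sketched as a small control loop. This is only an illustration under stated assumptions: in a real agent each decision would typically be made by an LLM, whereas here `needs_retrieval`, `choose_source`, and `is_relevant` are hypothetical keyword rules, and the `SOURCES` dictionary is invented sample data.

```python
# Hypothetical read-only sources; names and contents are illustrative only.
SOURCES = {
    "product_docs": ["Refunds are processed within 5 business days."],
    "hr_policies": ["Employees accrue 1.5 vacation days per month."],
}

def needs_retrieval(query: str) -> bool:
    """Stand-in for an LLM judgment: skip retrieval for small talk."""
    return query.lower().strip("!?. ") not in {"hi", "hello", "thanks"}

def choose_source(query: str) -> str:
    """Stand-in for an LLM routing decision, here a simple keyword rule."""
    return "hr_policies" if "vacation" in query.lower() else "product_docs"

def _content_words(text: str) -> set[str]:
    """Lowercased words longer than three characters, punctuation stripped."""
    return {w.strip(".,?!").lower() for w in text.split() if len(w) > 3}

def is_relevant(query: str, passage: str) -> bool:
    """Stand-in for an LLM relevance grader: require a shared content word."""
    return bool(_content_words(query) & _content_words(passage))

def agentic_retrieve(query: str) -> list[str]:
    """Decide whether to read, where to read from, and what is worth keeping."""
    if not needs_retrieval(query):
        return []
    source = choose_source(query)
    return [p for p in SOURCES[source] if is_relevant(query, p)]
```

The store is still never written to; only the reading has become selective.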

Agent Memory: Read–Write Knowledge Interaction

Agent memory introduces write operations during inference, making the system stateful and adaptive.

The agent can now:

- Persist new information from conversations

- Update or refine previously stored data

- Capture important events as long-term memory

- Build a personalized knowledge profile over time

Instead of only pulling from a static store, the system continuously builds and modifies its own knowledge base through interaction.

Your assistant doesn’t just recall your preferences — it learns them.

The agent gains capabilities for storing, modifying, and consolidating memory elements, enabling dynamic knowledge growth.
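
A minimal sketch of those store, modify, and consolidate operations might look like the following. The `AgentMemory` class and its method names are hypothetical, and the in-process dictionary is an assumption made to keep the example self-contained; a real system would persist to a database.

```python
import time
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class MemoryItem:
    key: str
    value: str
    updated_at: float = field(default_factory=time.time)

class AgentMemory:
    """Hypothetical in-process memory; a real system would persist to a database."""

    def __init__(self) -> None:
        self._items: dict[str, MemoryItem] = {}

    def write(self, key: str, value: str) -> None:
        """Persist new information, or overwrite an earlier value (upsert)."""
        self._items[key] = MemoryItem(key, value)

    def read(self, key: str) -> Optional[str]:
        """Return the stored value, or None if nothing is remembered."""
        item = self._items.get(key)
        return item.value if item else None

    def consolidate(self, keys: list[str], into: str) -> None:
        """Merge several entries into one summary entry and drop the originals."""
        merged = "; ".join(self._items.pop(k).value for k in keys if k in self._items)
        if merged:
            self.write(into, merged)
```

For example, writing `"diet"` twice keeps only the latest value, and `consolidate(["diet", "city"], into="profile")` folds both entries into a single profile record. The key difference from the retrieval-only stages is that `write` and `consolidate` run during inference.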

Where is this memory actually stored?

A core enabler of modern RAG and agent-memory architectures is the vector database. Systems like pgvector (a PostgreSQL extension), Pinecone, Weaviate, and Milvus store high-dimensional embeddings that represent knowledge in a form machines can retrieve semantically. These databases support fast similarity search, incremental updates, and multi-tenant collections, which makes them a good fit for adaptive AI systems that need to read, write, and reorganize knowledge over time. As agent memory evolves, vector stores become the backbone that lets agents persist new information, update context, and maintain long-term state efficiently.
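
To show what similarity search and incremental updates mean in practice, here is a toy in-memory store. It is a sketch only: `TinyVectorStore` is a hypothetical class, not the API of any of the products named above, and it does a brute-force scan where real vector databases use approximate nearest-neighbor indexes.

```python
import math

def _cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

class TinyVectorStore:
    """Hypothetical brute-force vector store for illustration only."""

    def __init__(self) -> None:
        self._vectors: dict[str, list[float]] = {}

    def upsert(self, item_id: str, vector: list[float]) -> None:
        """Insert a new embedding or replace the one stored under item_id."""
        self._vectors[item_id] = vector

    def delete(self, item_id: str) -> None:
        """Remove an embedding; a no-op if the id is unknown."""
        self._vectors.pop(item_id, None)

    def search(self, query: list[float], k: int = 1) -> list[tuple[str, float]]:
        """Return the k stored ids most similar to the query vector."""
        scored = [(item_id, _cosine(query, vec)) for item_id, vec in self._vectors.items()]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]
```

The two-dimensional vectors you would pass in a quick experiment are stand-ins for embeddings produced by a model; the read path (`search`) and the write path (`upsert`, `delete`) are the parts that carry over to a real store.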

Real-World Complexity

While this framing is useful, it’s still a simplified mental model.

Production-grade memory systems must handle:

- What should be remembered vs. discarded

- Memory decay or forgetting strategies

- Conflict resolution and data correction

- Long-term vs. short-term context separation

Managing memory is significantly more complex than the diagrams suggest.
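
Two of those concerns, forgetting and conflict resolution, can be illustrated with very simple policies. The sketch below assumes exponential decay with a 30-day half-life and a last-write-wins rule; both are illustrative choices, not recommendations, and the `HALF_LIFE_DAYS` and `threshold` values are made up.

```python
HALF_LIFE_DAYS = 30.0  # assumed tuning value, not taken from any real system

def retention_score(importance: float, age_days: float) -> float:
    """Importance weighted by exponential decay: halves every HALF_LIFE_DAYS."""
    return importance * 0.5 ** (age_days / HALF_LIFE_DAYS)

def should_keep(importance: float, age_days: float, threshold: float = 0.2) -> bool:
    """Forgetting policy: discard memories whose decayed score falls below threshold."""
    return retention_score(importance, age_days) >= threshold

def resolve_conflict(old: dict, new: dict) -> dict:
    """Last-write-wins: keep whichever record has the later timestamp."""
    return new if new["timestamp"] >= old["timestamp"] else old
```

Under these assumptions, a fully important memory (`importance=1.0`) survives for a bit over two months before dropping below the threshold, while a correction with a newer timestamp simply replaces the older record. Production systems usually need something richer, such as access-frequency boosts or keeping both versions for audit.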

Different Memory Types

Memory also isn’t monolithic. Systems may maintain multiple memory stores:

- Procedural: behavioral rules (e.g., “always respond with emojis”)

- Episodic: user-specific events (“trip mentioned on Oct 30”)

- Semantic: factual knowledge (“Eiffel Tower height is 330 m”)

Each may require different storage and retrieval strategies.
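
One way to picture separate stores is to tag every memory with its type and route it accordingly. The sketch below is hypothetical throughout: `MemoryType` and `TypedMemory` are invented names, and plain lists stand in for whatever backing store each type would actually use.

```python
from collections import defaultdict
from enum import Enum

class MemoryType(Enum):
    PROCEDURAL = "procedural"  # behavioral rules
    EPISODIC = "episodic"      # user-specific events
    SEMANTIC = "semantic"      # factual knowledge

class TypedMemory:
    """Hypothetical router that keeps one store per memory type."""

    def __init__(self) -> None:
        self._stores: dict[MemoryType, list[str]] = defaultdict(list)

    def add(self, kind: MemoryType, text: str) -> None:
        """Append a memory to the store for its type."""
        self._stores[kind].append(text)

    def get(self, kind: MemoryType) -> list[str]:
        """Return a copy of everything stored for one type."""
        return list(self._stores[kind])

    def system_rules(self) -> str:
        """Procedural memories are small enough to inject into every prompt."""
        return "\n".join(self._stores[MemoryType.PROCEDURAL])
```

The design point is the asymmetry: in this sketch procedural rules are always loaded, while episodic and semantic entries would more plausibly be fetched on demand, for instance by recency or by similarity search.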

Why this progression matters

Each step solves a limitation of the previous one:

- RAG was static.

- Agentic RAG made retrieval context-aware.

- Agent Memory made the system adaptive and evolving.

It’s a clear evolution from fixed knowledge → intelligent querying → continuous learning.

Key Takeaways

RAG made AI informed.

Agentic RAG made it strategic.

Agent Memory will make it adaptive.

As the Tech Co-Founder at Yugensys, I’m driven by a deep belief that technology is most powerful when it creates real, measurable impact.

At Yugensys, I lead our efforts in engineering intelligence into every layer of software development — from concept to code, and from data to decision.

With a focus on AI-driven innovation, product engineering, and digital transformation, my work revolves around helping global enterprises and startups accelerate growth through technology that truly performs.

Over the years, I’ve had the privilege of building and scaling teams that don’t just develop products; they craft solutions with purpose, precision, and performance. Our mission is simple yet bold: to turn ideas into intelligent systems that shape the future.

If you’re looking to extend your engineering capabilities or explore how AI and modern software architecture can amplify your business outcomes, let’s connect. At Yugensys, we build technology that doesn’t just adapt to change; it drives it.
